About Me

I am a Master's student at Stanford University. My research interests lie at the intersection of robotics, computer vision, and haptic sensing.

I have worked on robot learning at the Interactive Perception and Robot Learning Lab and on surgical robotics at the Collaborative Haptics and Robotics in Medicine Lab, both at Stanford. In summer 2024, I joined Intuitive Surgical as a Machine Learning Intern, working on human-robot interaction.

Before my Master's studies at Stanford, I completed a BEng in Electronic and Information Engineering at the Hong Kong Polytechnic University (HK PolyU), graduating with the highest award.

- Robotics

- Computer Vision

- Haptic Sensing

MSc in Electrical Engineering

Stanford University

BEng (Honours) in Electronic and Information Engineering

Hong Kong Polytechnic University

Exchange Student

McGill University

Experience

Research Assistant

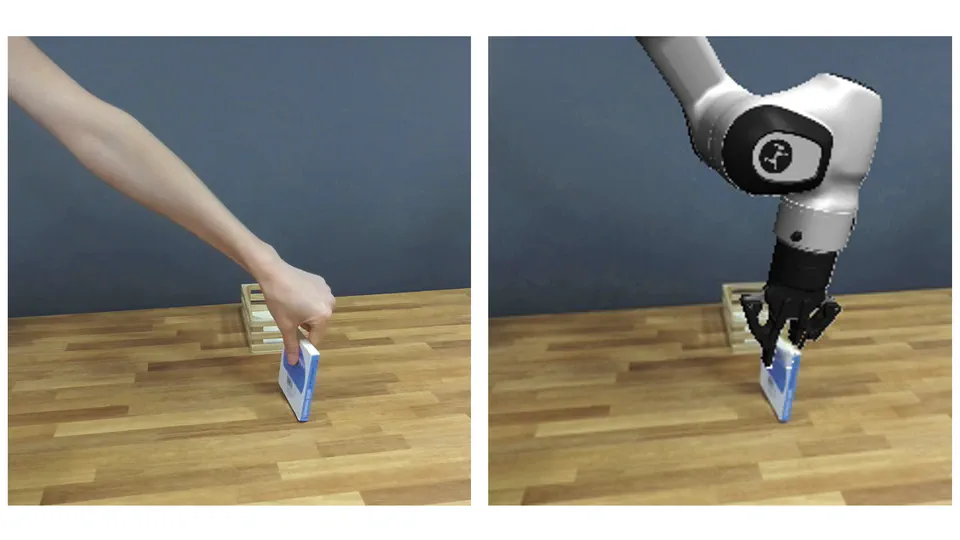

Interactive Perception and Robot Learning Lab, Stanford University | Slides | Supervisor: Prof. Jeannette Bohg

- Designing and implementing a cross-embodiment scheme for zero-shot transfer to a robot of a policy trained on videos of humans performing a task. Targeting submission to RSS 2025 in January 2025.

- Evaluated reinforcement learning methods in MuJoCo on robotics tasks that require fast, reactive motions. This project is funded by the Toyota Research Institute.

- Analyzed joint torque feedback in DROID, a large-scale robotics dataset. Presented guidelines for collecting haptic data in future large-scale, distributed robotics datasets at a Stanford cross-lab robotics meeting.

Machine Learning Intern

Intuitive Surgical

- Designed and implemented an end-to-end, deep-learning-based 3D gaze estimation algorithm that is robust to head motion and improves gaze estimation performance by 84.5%.

- Generated more than 100k synthetic training images for gaze estimation in Blender, using domain randomization.

- Designed a real-world data collection pipeline for gaze estimation and carried out the data collection; analyzed and visualized the resulting dataset in detail.

- Implemented a semi-automatic labeling tool for pupil localization and segmentation using SAM2.

Research Assistant

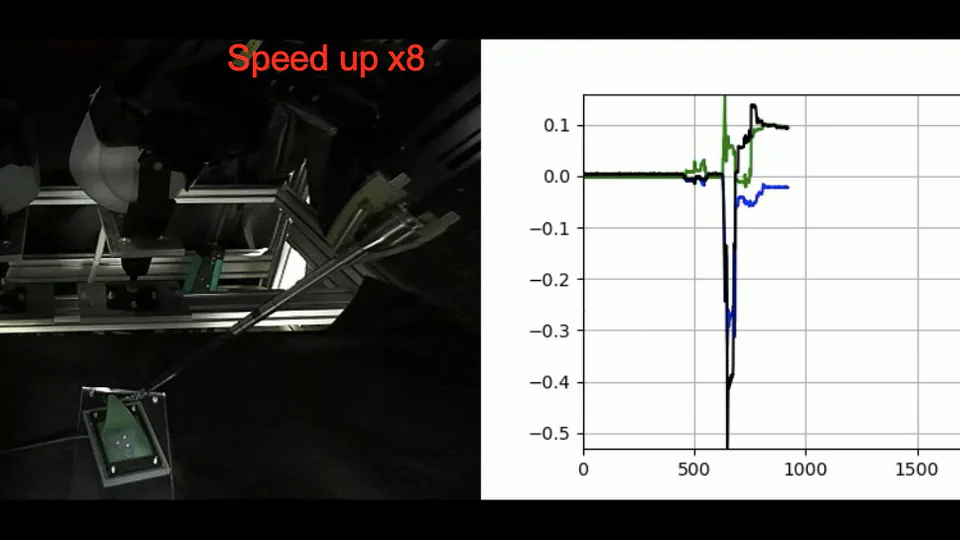

Collaborative Haptics and Robotics in Medicine Lab, Stanford University | Paper | Supervisor: Prof. Allison Okamura

- Designed and implemented a force-aware autonomous tissue manipulation model using imitation learning on the da Vinci Research Kit (dVRK). Adding haptic sensing increased the task completion rate of autonomous tissue retraction by 50%.

- Paper in submission.

- Presented work on force-aware autonomous surgery at the Stanford Human-Centered Artificial Intelligence Conference 2024.

Computer Vision Algorithm Intern

China Telecom AI | Challenge

- Co-led the team in the ICCV'23 Open Fine-Grained Activity Detection Challenge.

- Won third place in the video activity recognition track and second place in the video activity detection track.

Undergraduate Research Assistant

Prof. Mak's Lab, Hong Kong Polytechnic University | Report and Code | Supervisor: Prof. Man-Wai Mak

- Implemented deep speaker embeddings for speaker verification, with a domain loss to alleviate the language mismatch problem.

- With the MMD-based domain loss, the performance of ECAPA-TDNN (pre-trained on an English dataset) on an unlabelled Chinese dataset improved by 10%. The project won the Honours Project Technical Excellence Award.

Undergraduate Research Assistant

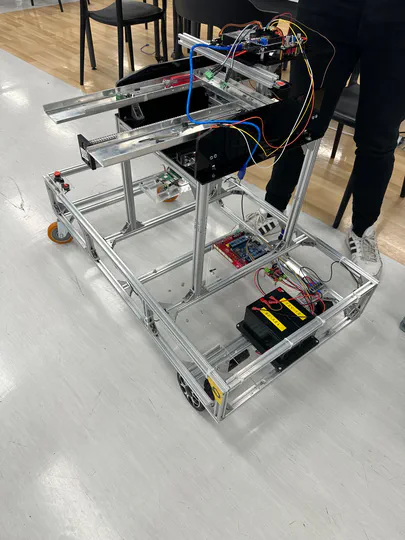

Dynamics, Estimation, and Control in Aerospace and Robotics Lab, McGill University | Report | Supervisor: Prof. James Forbes

- Designed a finite-horizon LQR controller for path tracking on an unmanned ground vehicle (UGV).

- Implemented with the Robot Operating System (ROS). The UGV state was represented as an element of SE(2), the group of direct (orientation-preserving) Euclidean isometries of the plane.

Undergraduate Research Assistant

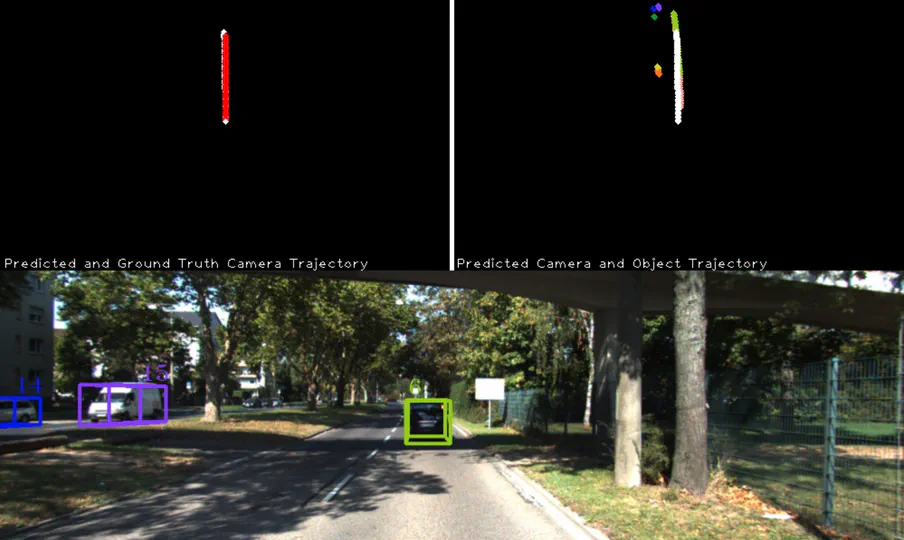

Autonomous Systems Lab, Hong Kong Polytechnic University | Supervisor: Prof. Yuxiang Sun

- Developed a deep-learning-based system integrating monocular visual odometry and multi-object tracking.

- Deployed deep optical-flow estimation for localization, and 3D object detection models for 3D multi-object tracking.

Education

MSc in Electrical Engineering

Stanford University | GPA: 4.18/4.00

Specialization:

- Robotics

- Machine Learning

- Signal Processing

BEng (Honours) in Electronic and Information Engineering

Hong Kong Polytechnic University | GPA: 4.01/4.00

Minor:

- Applied Mathematics

Exchange Student

McGill University | GPA: 4.00/4.00

Honours Project Technical Excellence Award

- This award recognizes final-year students who excel in their Honours Project.

- Sole recipient of the award in 2022/23.

Outstanding Student Award of Faculty of Engineering

- A prestigious annual honor awarded to a single final-year undergraduate student in the Faculty of Engineering, Hong Kong Polytechnic University.

- It recognizes full-time final-year students who excel in both academic and non-academic pursuits during their studies.

Scholarship on Outstanding Performance

- This award recognizes outstanding local and non-local students studying in Hong Kong.

- The scholarship is HK$80,000 per year.

Here is a selection of projects I worked on earlier.